AWS Certified Solutions Architect – Professional Dumps

AWS Certified Solutions Architect – Professional is a popular AWS Certification exam pursued by many people. To help you pass this exam, PassQuestion offers the latest valid AWS Certified Solutions Architect – Professional Dumps, which contain the most up-to-date questions with correct answers. We ensure 100% success on your first attempt.

AWS Certified Solutions Architect – Professional Certification Exam

The AWS Certified Solutions Architect – Professional exam is intended for individuals who perform a solutions architect role with two or more years of hands-on experience managing and operating systems on AWS. We recommend that individuals have two or more years of hands-on experience designing and deploying cloud architecture on AWS before taking this exam.

Abilities Validated by the Certification

Design and deploy dynamically scalable, highly available, fault-tolerant, and reliable applications on AWS

Select appropriate AWS services to design and deploy an application based on given requirements

Migrate complex, multi-tier applications on AWS

Design and deploy enterprise-wide scalable operations on AWS

Implement cost-control strategies

Exam Details

Format: Multiple choice, multiple answer

Type: Professional

Delivery Method: Testing center or online proctored exam

Time: 180 minutes to complete the exam

Cost: 300 USD (Practice Exam: 40 USD)

Language: Available in English, Japanese, Korean, and Simplified Chinese

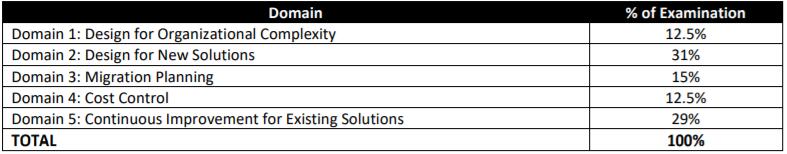

AWS Certified Solutions Architect – Professional Exam Content

View Online AWS Certified Solutions Architect – Professional Free Questions

1.Your company policies require encryption of sensitive data at rest. You are considering the possible options for protecting data while storing it at rest on an EBS data volume, attached to an EC2 instance.

Which of these options would allow you to encrypt your data at rest? (Choose 3)

A. Implement third party volume encryption tools

B. Implement SSL/TLS for all services running on the server

C. Encrypt data inside your applications before storing it on EBS

D. Encrypt data using native data encryption drivers at the file system level

E. Do nothing as EBS volumes are encrypted by default

Answer: ACD

2.A customer is deploying an SSL-enabled web application to AWS and would like to implement a separation of roles between the EC2 service administrators, who are entitled to log in to instances and make API calls, and the security officers, who will maintain and have exclusive access to the application’s X.509 certificate that contains the private key.

A. Upload the certificate on an S3 bucket owned by the security officers and accessible only by EC2 Role of the web servers.

B. Configure the web servers to retrieve the certificate upon boot from a CloudHSM that is managed by the security officers.

C. Configure system permissions on the web servers to restrict access to the certificate only to the authorized security officers.

D. Configure IAM policies authorizing access to the certificate store only to the security officers and terminate SSL on an ELB.

Answer: D
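Answer D relies on IAM policies around the IAM server certificate store. The sketch below builds two such policy documents as Python dictionaries: an allow policy for the security officers and an explicit deny for the EC2 administrators (in IAM, an explicit deny overrides any allow). The account ID is the AWS documentation placeholder, and the function names and the choice of actions are illustrative assumptions, not a complete or verified least-privilege design.

```python
import json

# Hypothetical account ID (the AWS documentation placeholder); replace with your own.
ACCOUNT_ID = "123456789012"
CERT_ARN = f"arn:aws:iam::{ACCOUNT_ID}:server-certificate/*"

# IAM actions that manage server certificates in the IAM certificate store.
CERT_ACTIONS = [
    "iam:UploadServerCertificate",
    "iam:UpdateServerCertificate",
    "iam:DeleteServerCertificate",
    "iam:GetServerCertificate",
]


def security_officer_policy():
    """Allow policy for a (hypothetical) security officers group."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "ManageServerCertificates",
            "Effect": "Allow",
            "Action": CERT_ACTIONS,
            "Resource": CERT_ARN,
        }],
    }


def ec2_admin_deny_policy():
    """Explicit deny for a (hypothetical) EC2 administrators group."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "DenyServerCertificateAccess",
            "Effect": "Deny",
            "Action": CERT_ACTIONS,
            "Resource": CERT_ARN,
        }],
    }


# Serialize to the JSON text that would be attached to each group.
print(json.dumps(security_officer_policy(), indent=2))
print(json.dumps(ec2_admin_deny_policy(), indent=2))
```

With SSL terminated on the ELB, the web servers themselves never hold the private key, which is what lets IAM alone enforce the separation of roles.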

3.You have recently joined a startup company building sensors to measure street noise and air quality in urban areas. The company has been running a pilot deployment of around 100 sensors for 3 months; each sensor uploads 1KB of sensor data every minute to a backend hosted on AWS. During the pilot, you measured a peak of 10 IOPS on the database, and you stored an average of 3GB of sensor data per month in the database. The current deployment consists of a load-balanced, auto-scaled ingestion layer using EC2 instances and a PostgreSQL RDS database with 500GB of standard storage. The pilot is considered a success, and your CEO has managed to get the attention of some potential investors. The business plan requires a deployment of at least 100K sensors, which needs to be supported by the backend. You also need to store sensor data for at least two years to be able to compare year-over-year improvements. To secure funding, you have to make sure that the platform meets these requirements and leaves room for further scaling.

Which setup will meet the requirements?

A. Add an SQS queue to the ingestion layer to buffer writes to the RDS instance

B. Ingest data into a DynamoDB table and move old data to a Redshift cluster

C. Replace the RDS instance with a 6 node Redshift cluster with 96TB of storage

D. Keep the current architecture but upgrade RDS storage to 3TB and 10K provisioned IOPS

Answer: C
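The pilot figures in question 3 are enough for a quick sizing check of the answer options. The sketch below assumes that stored volume and peak IOPS grow linearly with the number of sensors (an assumption, not something the question states) and uses decimal units (1 TB = 1000 GB).

```python
# Back-of-the-envelope sizing for question 3.
# Assumption: stored volume and peak IOPS scale linearly with sensor count.
PILOT_SENSORS = 100
TARGET_SENSORS = 100_000
PILOT_GB_PER_MONTH = 3     # average stored per month during the pilot
PILOT_PEAK_IOPS = 10       # peak measured on the pilot database
RETENTION_MONTHS = 24      # "at least two years"


def project(pilot_sensors, target_sensors, gb_per_month, peak_iops, months):
    """Return (scale factor, GB/month, total GB retained, peak IOPS) at target scale."""
    scale = target_sensors / pilot_sensors
    monthly_gb = gb_per_month * scale
    total_gb = monthly_gb * months
    return scale, monthly_gb, total_gb, peak_iops * scale


scale, monthly_gb, total_gb, iops = project(
    PILOT_SENSORS, TARGET_SENSORS, PILOT_GB_PER_MONTH, PILOT_PEAK_IOPS, RETENTION_MONTHS
)
total_tb = total_gb / 1000  # decimal TB, matching the units in the answer options
print(f"x{scale:.0f}: {monthly_gb:,.0f} GB/month, {total_tb:.0f} TB retained, ~{iops:,.0f} peak IOPS")

# Compare the retained volume with the storage offered by options D and C.
for option, capacity_tb in {"D: RDS with 3TB": 3, "C: Redshift with 96TB": 96}.items():
    verdict = "fits" if total_tb <= capacity_tb else "too small"
    print(f"{option} -> {verdict}")
```

This prints `x1000: 3,000 GB/month, 72 TB retained, ~10,000 peak IOPS`, then `D: RDS with 3TB -> too small` and `C: Redshift with 96TB -> fits`. Under the linear-growth assumption, option D's 10K provisioned IOPS would also leave no headroom for further scaling.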

4.A web company is looking to implement an intrusion detection and prevention system into their deployed VPC. This platform should have the ability to scale to thousands of instances running inside of the VPC.

How should they architect their solution to achieve these goals?

A. Configure an instance with monitoring software and the elastic network interface (ENI) set to promiscuous mode packet sniffing to see all traffic across the VPC.

B. Create a second VPC and route all traffic from the primary application VPC through the second VPC where the scalable virtualized IDS/IPS platform resides.

C. Configure servers running in the VPC using the host-based 'route' commands to send all traffic through the platform to a scalable virtualized IDS/IPS.

D. Configure each host with an agent that collects all network traffic and sends that traffic to the IDS/IPS platform for inspection.

Answer: D

5.A company is storing data on Amazon Simple Storage Service (S3). The company's security policy mandates that data is encrypted at rest.

Which of the following methods can achieve this? (Choose 3)

A. Use Amazon S3 server-side encryption with AWS Key Management Service managed keys.

B. Use Amazon S3 server-side encryption with customer-provided keys.

C. Use Amazon S3 server-side encryption with EC2 key pair.

D. Use Amazon S3 bucket policies to restrict access to the data at rest.

E. Encrypt the data on the client side before ingesting it into Amazon S3, using their own master key.

F. Use SSL to encrypt the data while in transit to Amazon S3.

Answer: ABE
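The three correct options differ mainly in who holds the key. The sketch below builds the keyword arguments that would be passed to boto3's S3 `put_object` call for each option, without contacting AWS. `ServerSideEncryption`, `SSEKMSKeyId`, `SSECustomerAlgorithm`, and `SSECustomerKey` are real `put_object` parameters; the helper function, the method labels, and the bucket and key names are hypothetical.

```python
import os


def put_object_kwargs(method, bucket, key, body, kms_key_id=None, customer_key=None):
    """Build hypothetical put_object kwargs for one at-rest encryption option."""
    kwargs = {"Bucket": bucket, "Key": key, "Body": body}
    if method == "SSE-KMS":
        # Option A: S3 encrypts with a key managed in AWS KMS.
        kwargs["ServerSideEncryption"] = "aws:kms"
        if kms_key_id:
            kwargs["SSEKMSKeyId"] = kms_key_id
    elif method == "SSE-C":
        # Option B: S3 encrypts with a 256-bit key supplied on every request.
        if customer_key is None or len(customer_key) != 32:
            raise ValueError("SSE-C needs a 256-bit (32-byte) customer key")
        kwargs["SSECustomerAlgorithm"] = "AES256"
        kwargs["SSECustomerKey"] = customer_key
    elif method == "client-side":
        # Option E: body is assumed to be ciphertext already; S3 just stores it.
        pass
    else:
        raise ValueError(f"unknown method: {method}")
    return kwargs


example = put_object_kwargs(
    "SSE-C", "example-bucket", "reports/data.csv", b"...", customer_key=os.urandom(32)
)
print(sorted(example))
```

Bucket policies (D) and SSL (F) do not appear here because they control access and protect data in transit; neither encrypts the stored object.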

6.Your firm has uploaded a large amount of aerial image data to S3. In the past, in your on-premises environment, you used a dedicated group of servers to batch process this data and used RabbitMQ, an open-source messaging system, to get job information to the servers. Once processed, the data would go to tape and be shipped offsite. Your manager told you to stay with the current design and leverage AWS archival storage and messaging services to minimize cost.

Which is correct?

A. Use SQS for passing job messages; use CloudWatch alarms to terminate EC2 worker instances when they become idle. Once data is processed, change the storage class of the S3 objects to Reduced Redundancy Storage.

B. Set up Auto Scaled workers triggered by queue depth that use Spot Instances to process messages in SQS. Once data is processed, change the storage class of the S3 objects to Reduced Redundancy Storage.

C. Set up Auto Scaled workers triggered by queue depth that use Spot Instances to process messages in SQS. Once data is processed, change the storage class of the S3 objects to Glacier.

D. Use SNS to pass job messages; use CloudWatch alarms to terminate Spot worker instances when they become idle. Once data is processed, change the storage class of the S3 objects to Glacier.

Answer: C
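"Triggered by queue depth" in answer C means the worker fleet is sized from the number of messages waiting in SQS. The sketch below shows one way to turn queue depth into a desired worker count, in the spirit of the backlog-per-instance approach AWS describes for scaling on SQS. The function name, the per-worker backlog target, and the fleet bounds are hypothetical values chosen for illustration.

```python
import math


def desired_workers(queue_depth, msgs_per_worker_target, min_workers=0, max_workers=20):
    """Hypothetical: smallest fleet keeping each worker's backlog at or below the target."""
    if msgs_per_worker_target <= 0:
        raise ValueError("msgs_per_worker_target must be positive")
    needed = math.ceil(queue_depth / msgs_per_worker_target)
    # Clamp to the fleet bounds; an empty queue scales down to min_workers.
    return max(min_workers, min(max_workers, needed))


for depth in (0, 120, 5000):
    print(depth, "messages ->", desired_workers(depth, msgs_per_worker_target=50), "workers")
```

With a target of 50 messages per worker, an empty queue yields 0 workers, 120 messages yield 3, and 5,000 messages are capped at the 20-worker maximum. Scaling to zero when idle is what keeps Spot worker costs down between batches.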


Copyright 2026 PassQuestion NETWORK CO.,LIMITED. All Rights Reserved.