AWS Certified Data Analytics – Specialty DAS-C01 Dumps

Preparing for the AWS Certified Big Data – Specialty exam? AWS Certified Data Analytics – Specialty is the new name of the retired AWS Certified Big Data – Specialty exam. PassQuestion's newly released AWS Certified Data Analytics – Specialty DAS-C01 Dumps are meant to give you the confidence to pass the certification exam on your first attempt. The PassQuestion DAS-C01 questions and answers are designed to help you prepare for the exam.

AWS Certified Data Analytics – Specialty (DAS-C01) Exam Description

The AWS Certified Data Analytics - Specialty (DAS-C01) examination is intended for individuals who perform in a data analytics-focused role. This exam validates an examinee's comprehensive understanding of using AWS services to design, build, secure, and maintain analytics solutions that provide insight from data.

It validates an examinee's ability to:

- Define AWS data analytics services and understand how they integrate with each other.

- Explain how AWS data analytics services fit in the data lifecycle of collection, storage, processing, and visualization.

Recommended AWS Knowledge

- A minimum of 5 years of experience with common data analytics technologies

- At least 2 years of hands-on experience working on AWS

- Experience and expertise working with AWS services to design, build, secure, and maintain analytics solutions

AWS Certified Data Analytics – Specialty exam

Exam code: DAS-C01

Format: Multiple-choice and multiple-response

Passing score: 750 (scale of 100-1000)

Duration: 180 minutes

Languages: English, Japanese, Korean, and Simplified Chinese

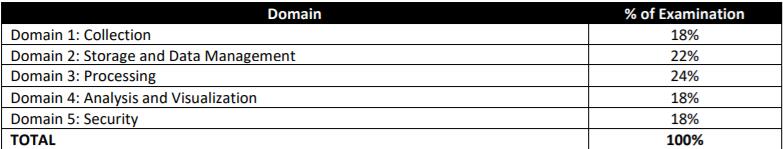

DAS-C01 Exam Content Outline

Domain 1: Collection

1.1 Determine the operational characteristics of the collection system

1.2 Select a collection system that handles the frequency, volume, and source of data

1.3 Select a collection system that addresses the key properties of data, such as order, format, and compression

Domain 2: Storage and Data Management

2.1 Determine the operational characteristics of a storage solution for analytics

2.2 Determine data access and retrieval patterns

2.3 Select an appropriate data layout, schema, structure, and format

2.4 Define a data lifecycle based on usage patterns and business requirements

2.5 Determine an appropriate system for cataloging data and managing metadata

Domain 3: Processing

3.1 Determine appropriate data processing solution requirements

3.2 Design a solution for transforming and preparing data for analysis

3.3 Automate and operationalize a data processing solution

Domain 4: Analysis and Visualization

4.1 Determine the operational characteristics of an analysis and visualization solution

4.2 Select the appropriate data analysis solution for a given scenario

4.3 Select the appropriate data visualization solution for a given scenario

Domain 5: Security

5.1 Select appropriate authentication and authorization mechanisms

5.2 Apply data protection and encryption techniques

5.3 Apply data governance and compliance controls

View Online AWS Certified Data Analytics – Specialty DAS-C01 Free Questions

1. A financial services company needs to aggregate daily stock trade data from the exchanges into a data store. The company requires that data be streamed directly into the data store, but also occasionally allows data to be modified using SQL. The solution should integrate complex, analytic queries running with minimal latency. The solution must provide a business intelligence dashboard that enables viewing of the top contributors to anomalies in stock prices.

Which solution meets the company’s requirements?

A. Use Amazon Kinesis Data Firehose to stream data to Amazon S3. Use Amazon Athena as a data source for Amazon QuickSight to create a business intelligence dashboard.

B. Use Amazon Kinesis Data Streams to stream data to Amazon Redshift. Use Amazon Redshift as a data source for Amazon QuickSight to create a business intelligence dashboard.

C. Use Amazon Kinesis Data Firehose to stream data to Amazon Redshift. Use Amazon Redshift as a data source for Amazon QuickSight to create a business intelligence dashboard.

D. Use Amazon Kinesis Data Streams to stream data to Amazon S3. Use Amazon Athena as a data source for Amazon QuickSight to create a business intelligence dashboard.

Answer: C

2. A financial company hosts a data lake in Amazon S3 and a data warehouse on an Amazon Redshift cluster. The company uses Amazon QuickSight to build dashboards and wants to secure access from its on-premises Active Directory to Amazon QuickSight.

How should the data be secured?

A. Use an Active Directory connector and single sign-on (SSO) in a corporate network environment.

B. Use a VPC endpoint to connect to Amazon S3 from Amazon QuickSight and an IAM role to authenticate Amazon Redshift.

C. Establish a secure connection by creating an S3 endpoint to connect Amazon QuickSight and a VPC endpoint to connect to Amazon Redshift.

D. Place Amazon QuickSight and Amazon Redshift in the security group and use an Amazon S3 endpoint to connect Amazon QuickSight to Amazon S3.

Answer: A

3. A real estate company has a mission-critical application using Apache HBase in Amazon EMR. Amazon EMR is configured with a single master node. The company has over 5 TB of data stored on a Hadoop Distributed File System (HDFS). The company wants a cost-effective solution to make its HBase data highly available.

Which architectural pattern meets the company’s requirements?

A. Use Spot Instances for core and task nodes and a Reserved Instance for the EMR master node. Configure the EMR cluster with multiple master nodes. Schedule automated snapshots using Amazon EventBridge.

B. Store the data on an EMR File System (EMRFS) instead of HDFS. Enable EMRFS consistent view. Create an EMR HBase cluster with multiple master nodes. Point the HBase root directory to an Amazon S3 bucket.

C. Store the data on an EMR File System (EMRFS) instead of HDFS and enable EMRFS consistent view. Run two separate EMR clusters in two different Availability Zones. Point both clusters to the same HBase root directory in the same Amazon S3 bucket.

D. Store the data on an EMR File System (EMRFS) instead of HDFS and enable EMRFS consistent view. Create a primary EMR HBase cluster with multiple master nodes. Create a secondary EMR HBase read-replica cluster in a separate Availability Zone. Point both clusters to the same HBase root directory in the same Amazon S3 bucket.

Answer: D
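Several of the options in this question point the HBase root directory at an Amazon S3 bucket, and one adds a read-replica cluster. As a rough illustration only, the sketch below builds the kind of EMR configuration classifications that such a setup typically uses. The bucket path and the helper name `hbase_on_s3_configurations` are hypothetical, and the property names should be checked against the current Amazon EMR documentation before use.

```python
def hbase_on_s3_configurations(root_dir, read_replica=False):
    """Build EMR configuration classifications for HBase on Amazon S3.

    root_dir is an S3 URI used as the HBase root directory.
    read_replica=True marks the cluster as a read-replica of a primary
    cluster that shares the same root directory.
    """
    hbase_props = {"hbase.emr.storageMode": "s3"}
    if read_replica:
        hbase_props["hbase.emr.readreplica.enabled"] = "true"
    return [
        {"Classification": "hbase-site", "Properties": {"hbase.rootdir": root_dir}},
        {"Classification": "hbase", "Properties": hbase_props},
    ]


# Hypothetical bucket and prefix, for illustration only.
ROOT_DIR = "s3://example-bucket/hbase-root"

primary = hbase_on_s3_configurations(ROOT_DIR)
replica = hbase_on_s3_configurations(ROOT_DIR, read_replica=True)
```

Each list would be passed as the `Configurations` value when creating the corresponding cluster; nothing here calls AWS.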

4. A software company hosts an application on AWS, and new features are released weekly. As part of the application testing process, a solution must be developed that analyzes logs from each Amazon EC2 instance to ensure that the application is working as expected after each deployment. The collection and analysis solution should be highly available with the ability to display new information with minimal delays.

Which method should the company use to collect and analyze the logs?

A. Enable detailed monitoring on Amazon EC2, use Amazon CloudWatch agent to store logs in Amazon S3, and use Amazon Athena for fast, interactive log analytics.

B. Use the Amazon Kinesis Producer Library (KPL) agent on Amazon EC2 to collect and send data to Kinesis Data Streams to further push the data to Amazon Elasticsearch Service and visualize using Amazon QuickSight.

C. Use the Amazon Kinesis Producer Library (KPL) agent on Amazon EC2 to collect and send data to Kinesis Data Firehose to further push the data to Amazon Elasticsearch Service and Kibana.

D. Use Amazon CloudWatch subscriptions to get access to a real-time feed of logs and have the logs delivered to Amazon Kinesis Data Streams to further push the data to Amazon Elasticsearch Service and Kibana.

Answer: D
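Option D relies on a CloudWatch Logs subscription filter that forwards log events to a Kinesis data stream. The following is a minimal sketch of the request parameters involved, assuming the CloudWatch Logs `PutSubscriptionFilter` API. The log group name, filter name, and ARNs are hypothetical placeholders, and the helper only builds the arguments without calling AWS.

```python
def subscription_filter_args(log_group, stream_arn, role_arn, pattern=""):
    """Build arguments for a CloudWatch Logs subscription filter.

    An empty filter pattern matches every log event in the log group.
    role_arn is the IAM role CloudWatch Logs assumes to write to the stream.
    """
    return {
        "logGroupName": log_group,
        "filterName": "app-logs-to-kinesis",  # hypothetical filter name
        "filterPattern": pattern,
        "destinationArn": stream_arn,
        "roleArn": role_arn,
    }


# Hypothetical identifiers, for illustration only.
args = subscription_filter_args(
    log_group="/example/app",
    stream_arn="arn:aws:kinesis:us-east-1:111122223333:stream/example-logs",
    role_arn="arn:aws:iam::111122223333:role/example-cwl-to-kinesis",
)
# With boto3 installed and credentials configured, these could be passed as
# boto3.client("logs").put_subscription_filter(**args)
```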

5. A data analyst is using AWS Glue to organize, cleanse, validate, and format a 200 GB dataset. The data analyst triggered the job to run with the Standard worker type. After 3 hours, the AWS Glue job status is still RUNNING. Logs from the job run show no error codes. The data analyst wants to improve the job execution time without overprovisioning.

Which actions should the data analyst take?

A. Enable job bookmarks in AWS Glue to estimate the number of data processing units (DPUs). Based on the profiled metrics, increase the value of the executor-cores job parameter.

B. Enable job metrics in AWS Glue to estimate the number of data processing units (DPUs). Based on the profiled metrics, increase the value of the maximum capacity job parameter.

C. Enable job metrics in AWS Glue to estimate the number of data processing units (DPUs). Based on the profiled metrics, increase the value of the spark.yarn.executor.memoryOverhead job parameter.

D. Enable job bookmarks in AWS Glue to estimate the number of data processing units (DPUs). Based on the profiled metrics, increase the value of the num-executors job parameter.

Answer: B
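The options in this question revolve around two Glue settings: job metrics, which are used for profiling, and the maximum capacity in DPUs. As a hedged sketch, the helper below assembles arguments in the shape accepted by the Glue `StartJobRun` API, using the `--enable-metrics` special parameter and the `MaxCapacity` field. The job name and DPU value are hypothetical, and the right capacity would come from the profiled metrics rather than from this example.

```python
def glue_job_run_args(job_name, max_capacity_dpus):
    """Build arguments for a Glue job run with metrics enabled.

    max_capacity_dpus is the number of data processing units (DPUs)
    to allocate to this run.
    """
    return {
        "JobName": job_name,
        "MaxCapacity": float(max_capacity_dpus),
        "Arguments": {"--enable-metrics": "true"},
    }


# Hypothetical job name and capacity, for illustration only.
run_args = glue_job_run_args("example-cleanse-job", 20)
# With boto3 installed and credentials configured, these could be passed as
# boto3.client("glue").start_job_run(**run_args)
```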


Copyright 2025 PassQuestion NETWORK CO., LIMITED. All Rights Reserved.